NeuProWorld

Model-based reinforcement learning with neural stochastic differential equations

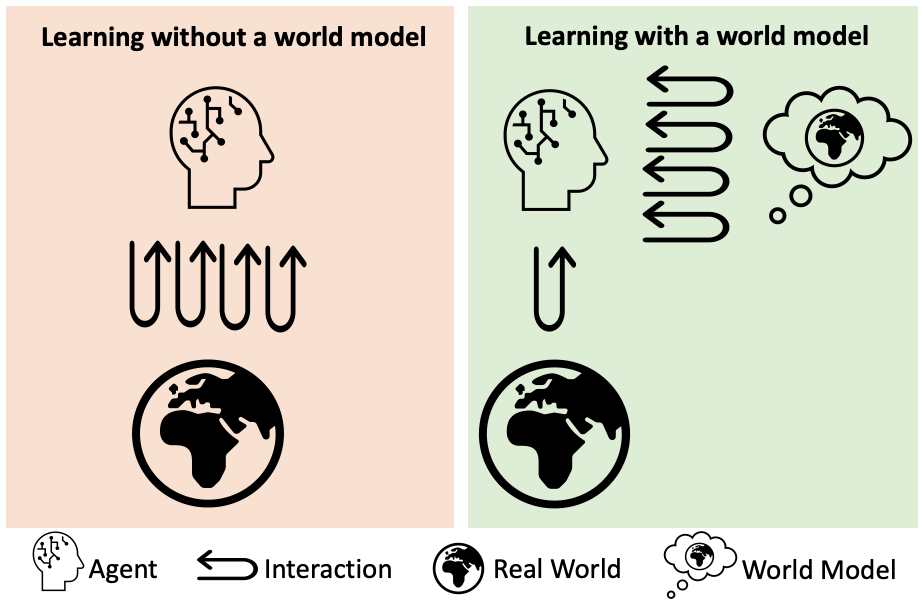

Technological challenge. Intelligent autonomous agents that interact with their environment, such as drones, self-driving cars, and robot arms, are shaping our technological future. Current technologies can equip cyber-physical systems with vast sensorimotor capabilities. However, we still cannot unlock their full potential, because we lack an algorithmic framework that can train them for complex tasks without an excessive number of trial-and-error rounds. Drones can perform surveillance but cannot deliver parcels. Self-driving cars can keep their lane on the highway but cannot navigate safely in urban areas. Robot arms can sort screws but cannot perform high-precision surgery. Once we identify the hidden properties of current reinforcement learning that cause its pronounced data hunger, we will understand which new assumptions would let intelligent agents excel at their tasks. Such an achievement would mark a quantum leap in autonomous technologies and improve both safety and sustainability in a variety of real-world domains, including transportation, production, and health care.

Goal. To explain how the dependence of reinforcement learning on excessive trial and error can be identified and circumvented.